Coding a Simple Screen Capture App

An open source, simple app to make screen capture videos

Recently I wanted to make a few quick videos to demo software and to provide supplementary material for tutorials. I assumed there would be lots of free options available, as this seemed to me a very simple and common task. However, a simple standalone program didn't leap out of Google's results, with many options requiring overly complex procedures such as signing up to an online account where you share your screen captures. Here's a quick list of what I tried:

- Jing - Free, requires signing up to a TechSmith/Jing account. I gave it a go and it looks and works great, until it came to exporting the videos. Jing only saves to .swf and if you want more formats you have to buy additional software such as Camtasia for $99 (Mac version). I realise money has to be made somewhere, but normally the line between free/trial software and commercial versions is disguised well, such that you assume the commercial version is packed full of features that will be of great benefit to you. Here, however, it's really obvious that the free software has been restricted: you can almost envisage the few lines of code that enable/disable encoding to useful formats such as mp4. Whilst on the topic of TechSmith software, I noticed they sell SnagIt, which seemed remarkably similar to Jing; in fact I'm not sure what the differences are.

- RecordItNow - Free but KDE based, and installing KDE on OSX is not the simplest of tasks.

- Voila/Screencast Maker - Non Free.

- Quicktime - This is probably the best free solution if you're on OSX. By selecting File->New Screen Recording you can capture the entire desktop or selected areas. You are limited, however, in the video encoding options. Videos automatically save as .mov files and you cannot change frame rates etc.

The Plan

I have a lot of experience with Python and Qt. In Qt there's both a class to access the current screen properties and also the ability to grab the current screen, crop and paint on it then save the resultant image. Specifically, the app that follows is written around the two methods:

    # returns QDesktopWidget
    this_desktop = QApplication.desktop()
    # grab desktop into a QPixmap
    px = QPixmap.grabWindow(this_desktop.winId())

Pseudo code for the app is as follows:

- Load GUI - options for entire desktop/selected area
    - Enter fps
    - Select Desktop or Selected Area
    - If Selected Area, show options for defining area:
        - Select TopLeft and BottomRight
        - Option to toggle semi-opaque window over selected area
    - Optional delay before capture
- Capture:
    - Thread a process to handle the capture event
    - Capture event saves the current desktop to image
    - If selected area, crop the QPixmap before saving
    - Wait 1/fps before saving next image
    - Main thread controls stop/start
- Encode:
    - Use FFMpeg to encode the resultant images
    - Provide ability to control FFMpeg options

The Interface

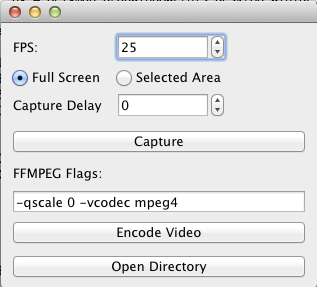

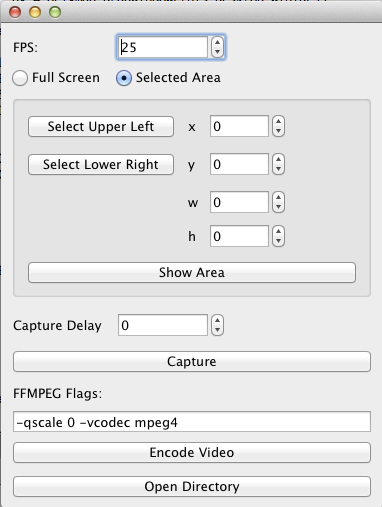

The aim was to keep the interface simple with minimal inputs to cover the pseudo code above. The resultant interface is shown below.

As indicated from the above, the only option that changes the appearance of the interface is the selection of the capture area.

Screen Capture

As stated above, at the core of the screen capture routine is the Qt method:

    this_desktop = QApplication.desktop()
    px = QPixmap.grabWindow(this_desktop.winId())

and the QPixmap can then be saved:

    px.save('screen.jpg', 'jpg')

When capturing the full desktop, the capturing process is straightforward and the above lines are used as is. However, when the capture area is not the full desktop but a region within it, we need both a way to define this area and a way to use it to save only that portion of the screen.

The simplest way to define the capture area is to select its upper-left and lower-right corners. Allowing the user to type these points into QSpinBoxes is simple; allowing the user to select them with a mouse click is not. The main problem is that the desktop lies outside the QMainWindow, so mouse clicks on it cannot be detected; in fact, they take focus away from our application. The somewhat ugly solution I adopted to circumvent this issue was to create a semi-transparent widget, maximised to the size of the desktop. Mouse clicks on this widget can be detected and their location used to define the custom capture area. Below is the TransWindow class that defines the selected area:

    # Imports assume the PyQt4 binding
    import numpy
    from PyQt4.QtCore import Qt, SIGNAL
    from PyQt4.QtGui import QApplication, QGridLayout, QWidget


    class TransWindow(QWidget):
        """Near-transparent full-screen window that records one mouse click."""

        def __init__(self):
            QWidget.__init__(self, None, Qt.Window)
            self.layout = QGridLayout()
            self.setLayout(self.layout)
            self.showMaximized()
            self.activateWindow()
            self.raise_()
            # Frameless, always on top, bypassing the X11 window manager
            self.setWindowFlags(Qt.Window
                                | Qt.WindowStaysOnTopHint
                                | Qt.X11BypassWindowManagerHint
                                | Qt.FramelessWindowHint)
            screenGeometry = QApplication.desktop().availableGeometry()
            self.setGeometry(screenGeometry)
            # White at 5% opacity
            self.setStyleSheet("QWidget { background-color: rgba(255,255,255, 5%); }")

        def mousePressEvent(self, event):
            self.hide()
            desktop = QApplication.desktop()
            # Offset between the full screen and the available (unreserved) area
            xd = desktop.screenGeometry().x() - desktop.availableGeometry().x()
            yd = desktop.screenGeometry().y() - desktop.availableGeometry().y()
            # Note: this attribute shadows the inherited QWidget.pos() method
            self.pos = numpy.array([event.pos().x() - xd,
                                    event.pos().y() - yd])
            self.emit(SIGNAL("mouse_press()"))

The above window is shown by the main window when the user clicks Select Upper Left or Select Lower Right. On the mousePressEvent the TransWindow is hidden and the click position is stored. The xd and yd offsets compensate for the difference between QApplication.desktop().screenGeometry() and QApplication.desktop().availableGeometry(), which accounts for OS-level restricted regions of the desktop such as the top menu bar in OSX. After the position is stored, the window emits a mouse_press signal which is processed by the main window. I find colouring the window white with 5% opacity works well.

With the ability for the user to define the upper-left and lower-right positions by clicking on the screen, we need a way to use this information to only save the area selected. Fortunately, a very simple method of the QPixmap class can be used:

    px2 = px.copy(selected_QRect)

In the above, a copy of the QPixmap containing the full desktop is made that is cropped to the dimensions of selected_QRect. Therefore, we only need to create a QRect containing the points selected by the user.
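Building that rectangle from the two clicks is mostly bookkeeping. The sketch below is a plain-Python illustration (no Qt required) of how two clicked points could be normalised into x, y, width and height values; the function name `rect_from_corners` is hypothetical and not taken from the app.

```python
def rect_from_corners(p1, p2):
    """Return (x, y, width, height) for the box spanned by two (x, y) points.

    The points may be given in either order, so a user who clicks the
    lower-right corner first still gets a valid rectangle.
    """
    (x1, y1), (x2, y2) = p1, p2
    left, top = min(x1, x2), min(y1, y2)
    width, height = abs(x2 - x1), abs(y2 - y1)
    return left, top, width, height


# Example: upper-left click at (100, 50), lower-right click at (420, 290).
x, y, w, h = rect_from_corners((100, 50), (420, 290))
print(x, y, w, h)  # prints: 100 50 320 240
```

The four values could then be passed to `QRect(x, y, w, h)`, or directly to `px.copy(x, y, w, h)`.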

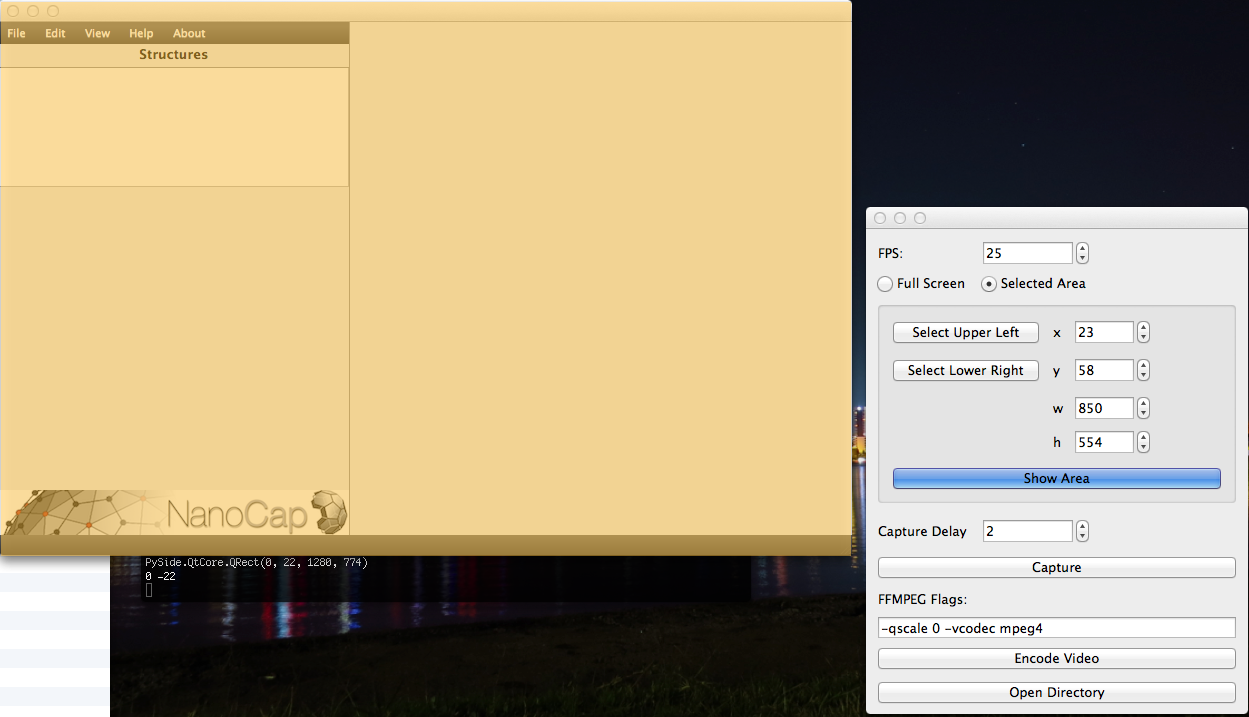

Once the area has been selected the user can toggle showing this area and make adjustments as shown below:

Threading

There's an obvious need for threading in this app, and instinctively the capture routine would simply be placed on another thread. However, things are not this simple. The main Qt application runs in an event loop on the main GUI thread, and GUI objects such as the QPixmap should be created by that thread. For this reason, the thread that controls the capturing does not create the QPixmap itself but instead emits signals that are caught by the main thread, which then takes the screenshot. This functionality is shown below:

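    # Python 2 standard library (the module is named queue in Python 3)
    import Queue
    import time

    # Import assumes the PyQt4 binding
    from PyQt4.QtCore import QThread, SIGNAL


    class SnapShots(QThread):
        """Timing thread: asks the main window for one capture per frame period."""

        def __init__(self, main_window, fps=25):
            QThread.__init__(self)
            self.fps = fps
            self.main_window = main_window
            self.halt = False
            self.queue = Queue.Queue()

        def run(self, fps=None):
            if fps is not None:
                self.fps = fps
            period = 1.0 / self.fps
            while not self.halt:
                st = time.time()
                # Wait until the main thread has consumed the previous request
                while not self.queue.empty():
                    pass
                self.queue.put("capture")
                self.emit(SIGNAL("capture()"))
                # Sleep only for what is left of this frame's period
                td = time.time() - st
                wait = period - td
                if wait > 0:
                    time.sleep(wait)
            # Empty the queue here (thread safe)
            with self.queue.mutex:
                self.queue.queue.clear()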

    class MainWindow(QMainWindow):

        def __init__(self):
            QMainWindow.__init__(self, None, Qt.WindowStaysOnTopHint)
            # ...
            # In the main window, connect the capture signal
            self.snap_shots = SnapShots(self, fps=25)
            self.connect(self.snap_shots, SIGNAL("capture()"), self.capture)

        def capture(self):
            """Runs on the GUI thread each time SnapShots emits capture()."""
            app.processEvents()
            if not self.snap_shots.queue.empty():
                # Consume the pending request so the timing thread can continue
                self.snap_shots.queue.get(0)
                arrow = QPixmap(self.arrow_icon)
                self.px = QPixmap.grabWindow(QApplication.desktop().winId())
                # Paint the arrow icon onto the grab at the current cursor position
                painter = QPainter(self.px)
                painter.drawPixmap(QCursor.pos(), arrow)
                painter.end()
                if self.options.capture_area_sa.isChecked():
                    # Crop to the user-selected area
                    self.px2 = self.px.copy(self.options.sa_x.value(),
                                            self.options.sa_y.value(),
                                            self.options.sa_w.value(),
                                            self.options.sa_h.value())
                else:
                    self.px2 = self.px
                outfile = ('tmp_{}_{:' + self.fmt + '}.jpg').format(self.UID, self.capture_count)
                self.px2.save(outfile, 'jpg')
                self.capture_count += 1
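The hand-off between the timing thread and the GUI thread can be tried out without Qt. The sketch below is a stand-in, not the app's code: it is written for Python 3, uses the standard `threading` and `queue` modules, swaps the busy-wait for a bounded queue, and calls a hypothetical `take_snapshot` callback where the real app would grab a QPixmap. In the real app the main thread is driven by Qt's event loop and a signal rather than a blocking `get`.

```python
import queue
import threading


def request_loop(requests, stop, fps):
    """Worker thread: ask for one capture per period, never capture itself."""
    period = 1.0 / fps
    while not stop.is_set():
        try:
            # maxsize=1 means at most one request is outstanding; if the
            # consumer has not taken the previous one yet, skip this frame.
            requests.put_nowait("capture")
        except queue.Full:
            pass
        stop.wait(period)  # sleeps, but wakes early once stop is set


def run_capture(take_snapshot, fps=50, n_frames=5):
    """Consume capture requests on the calling thread, as a GUI thread would."""
    requests = queue.Queue(maxsize=1)
    stop = threading.Event()
    worker = threading.Thread(target=request_loop, args=(requests, stop, fps))
    worker.start()
    try:
        for frame in range(n_frames):
            requests.get(timeout=5)   # wait for the worker's next request
            take_snapshot(frame)      # the "Qt work" stays on this thread
    finally:
        stop.set()
        worker.join()
    return worker.is_alive()


captured = []
still_running = run_capture(captured.append)
print(captured, still_running)  # prints: [0, 1, 2, 3, 4] False
```

The bounded queue gives the same back-pressure as the `while not self.queue.empty(): pass` loop without spinning the CPU, and the `Event` lets the worker stop promptly instead of finishing a full sleep.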

FPS

Ideally, the snapshot process would be instantaneous and the images would be saved every 1/fps seconds, where fps is defined by the user. However, each capture takes time. We therefore compensate by subtracting the time already elapsed in the current iteration from the period before sleeping, as in the SnapShots thread:

    while not self.halt:
        st = time.time()
        while not self.queue.empty():
            pass
        self.queue.put("capture")
        self.emit(SIGNAL("capture()"))
        td = time.time() - st
        wait = period - td
        if wait > 0:
            time.sleep(wait)

The above also shows a queue which is used so that we do not overload the main window with capture events. The queue also allows us to terminate the capture loop immediately.
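The timing compensation can be isolated from Qt as well. The following sketch (Python 3, with hypothetical names `run_at_fps` and `work`) measures how long each iteration's work takes and sleeps only for the remainder of the period, in the same way as the loop above.

```python
import time


def run_at_fps(work, fps, n_frames):
    """Call work() n_frames times, aiming for one call every 1/fps seconds.

    Returns the start time of each call, relative to the first one.
    """
    period = 1.0 / fps
    starts = []
    t0 = time.time()
    for _ in range(n_frames):
        st = time.time()
        starts.append(st - t0)
        work()
        td = time.time() - st   # time the work itself consumed
        wait = period - td      # what is left of this frame's slot
        if wait > 0:
            time.sleep(wait)
    return starts


# Work that takes roughly 10 ms, scheduled at 20 fps (50 ms period).
starts = run_at_fps(lambda: time.sleep(0.01), fps=20, n_frames=4)
gaps = [b - a for a, b in zip(starts, starts[1:])]
print(["%.3f" % g for g in gaps])
```

With the compensation, each gap should land near the 50 ms period rather than 50 ms plus the time spent working. If the work takes longer than the period, `wait` goes negative, no sleep happens, and the effective frame rate drops below the requested one.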

Encoding

Once the images are saved, video encoding is straightforward using FFMpeg. The user can enter encoding options in a QLineEdit, e.g. the video codec can be specified using the -vcodec flag.
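As an illustration of how the saved frames and the user's option string might be turned into an FFmpeg invocation, here is a small sketch. It is not the app's code: the helper name `build_ffmpeg_command`, the five-digit frame counter and the output filename are assumptions, while `-y`, `-framerate`, `-i` and `-vcodec` are standard FFmpeg options.

```python
import shlex


def build_ffmpeg_command(uid, fps, user_options, output="capture.mp4"):
    """Return the FFmpeg argument list for a numbered JPEG sequence.

    Assumes frames were saved as tmp_<uid>_00000.jpg, tmp_<uid>_00001.jpg, ...
    """
    pattern = "tmp_{}_%05d.jpg".format(uid)
    cmd = ["ffmpeg", "-y", "-framerate", str(fps), "-i", pattern]
    # shlex.split keeps quoted arguments together, e.g. -vf "scale=640:-2"
    cmd += shlex.split(user_options)
    cmd.append(output)
    return cmd


cmd = build_ffmpeg_command("a1b2", 25, "-vcodec libx264")
print(" ".join(cmd))
# prints: ffmpeg -y -framerate 25 -i tmp_a1b2_%05d.jpg -vcodec libx264 capture.mp4
```

The list can be handed to `subprocess.call(cmd)`; passing a list rather than a single string avoids shell quoting problems with whatever the user typed.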

Summary

And that's it, a very short application (~300 lines). The code is available for download below.
